What is A/B testing?

Imagine you're a chef trying to perfect a new recipe. Instead of guessing which version tastes better, you serve two slightly different versions to your customers and track their reactions. A/B testing in UX is a research method that works the same way - it lets designers serve different versions of their digital products to users and measure which version works better.

For example, Spotify might test two different ways of showing podcast recommendations. Some users see recommendations based on their listening history, while others see trending podcasts in their favorite categories. By measuring which version gets more people to try new podcasts, they can choose the more effective approach.

What is A/B testing in UX design?

In UX design, A/B testing moves beyond simple preference testing to measure how design changes affect user behavior and business goals. UX A/B testing helps teams understand whether their design changes actually improve the user experience in measurable ways.

Take the example of a meditation app's home screen. The current design shows a large "Start Meditating" button with recommended sessions below. The team wonders if showing users their meditation streak first would motivate more daily practice. By running an A/B test, they can measure whether the streak display actually increases daily meditation rates.

What can designers A/B test?

Designers can test any aspect of the user experience that might affect user behavior and satisfaction. When conducting A/B testing in UX design, teams often focus on testing interface elements that directly impact key user actions.

A video conferencing app might test whether showing camera and microphone controls permanently at the bottom of the screen helps users manage their settings better than hiding these controls in a menu. They measure not just which version users prefer, but which one reduces the frequency of "you're on mute" moments during calls.

What is the difference between usability testing and A/B testing?

While both methods improve user experience, they serve different purposes in UX research. A/B testing focuses on measuring outcomes - which version performs better according to specific metrics. Usability testing, by contrast, reveals how users think and behave while using your interface.

What is A/B testing in research?

In research contexts, A/B testing combines the rigor of scientific experimentation with the practical needs of product development. In UX, it is a way to bring the scientific method to design decisions. Researchers use it to validate design hypotheses with real user data.

For instance, a team working on an online learning platform might hypothesize that showing progress bars in courses will increase completion rates. Through an A/B test, they can measure whether students with progress bars actually complete more lessons than those without.

What is the purpose of A/B testing?

The primary purpose of A/B testing is to remove guesswork from design decisions. Rather than relying on opinion or intuition, teams can use data to guide their choices. Conducting A/B tests properly starts with setting clear goals tied to user needs and business objectives.

Another key purpose is risk management. When making significant changes to a successful product, teams need to ensure they're not accidentally making things worse. A/B testing lets them validate changes with a small group before rolling them out widely.

The third crucial purpose is continuous improvement. By regularly testing small changes, teams can gradually optimize their design for better user experience and business results.

What are the requirements for A/B testing?

To run effective tests, teams need several key elements in place. First, they need enough user traffic to gather statistically significant results. Published A/B testing examples often come from large products because their traffic lets them gather data quickly.

Teams also need clear metrics tied to business goals, tools to implement different versions of their design, and systems to measure user behavior. Most importantly, they need a culture that values data-driven decision making over personal preferences or hierarchy.

How to do A/B testing in UX?

The process of conducting effective A/B tests requires careful planning and systematic execution. Let's walk through each phase of the process, understanding how each step builds toward making informed design decisions.

1. Research and planning

Before launching any test, you need to identify meaningful testing opportunities. Start by diving into your current metrics and pain points. Look for areas where users struggle or abandon their journey. Review user feedback and support tickets to spot patterns of frustration or confusion. Your customer-facing teams often have valuable insights about where users consistently face challenges.

With potential testing areas identified, you'll need to define clear objectives. Rather than vague goals like "improve the experience," set specific, measurable targets. For example, instead of "make signup easier," aim for "increase signup completion rate by 15%." This specificity helps you determine whether your test succeeded and guides your measurement strategy.

Your hypothesis should connect your proposed change to an expected outcome. Write it in a format like: "By changing X to Y, we expect to see Z because [reasoning]." For instance: "By simplifying the signup form to three fields instead of seven, we expect to see a 20% increase in completion rates because users often abandon lengthy forms."

2. Test design

Designing your test variations requires careful consideration. While you might be tempted to test multiple changes at once, resist this urge. Create your Version A (control) and Version B (variant) with only one meaningful difference between them. This discipline ensures you can attribute any performance differences to that specific change.

Think through how you'll measure success. Beyond your primary metric, consider secondary metrics that might be affected. If you're testing a shortened signup form, you'll want to track not just completion rates but also the quality of signups and any impact on customer support inquiries.

3. Implementation

Launching your test requires careful preparation. Calculate how many users you need, and how long the test should run, to reach statistical significance for the size of effect you expect to detect. Consider seasonality and external factors that might affect your results. For example, an e-commerce site wouldn't want to test major changes during Black Friday, when user behavior differs from normal.
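As a rough sketch of that calculation, the snippet below estimates the users needed per version with the standard normal-approximation formula for comparing two conversion rates. The 40% baseline, the 46% target (a 15% relative lift), the 5% significance level, 80% power, and the 500 daily visitors are all illustrative assumptions, and the function names are hypothetical. Your testing tool or a statistician may use a slightly different formula.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, expected_rate, alpha=0.05, power=0.80):
    """Approximate users needed in EACH version for a two-sided
    comparison of two conversion rates (normal approximation)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_power = NormalDist().inv_cdf(power)          # critical value for power
    pooled = (baseline_rate + expected_rate) / 2
    numerator = (
        z_alpha * sqrt(2 * pooled * (1 - pooled))
        + z_power * sqrt(
            baseline_rate * (1 - baseline_rate)
            + expected_rate * (1 - expected_rate)
        )
    ) ** 2
    return ceil(numerator / (expected_rate - baseline_rate) ** 2)


def days_to_run(users_per_variant, daily_visitors, variants=2):
    """Days needed if all daily visitors are split across the variants."""
    return ceil(users_per_variant * variants / daily_visitors)


# Illustrative numbers only: 40% baseline signup completion, hoping for 46%.
needed = sample_size_per_variant(0.40, 0.46)
print("Users per version:", needed)
print("Days at 500 visitors/day:", days_to_run(needed, daily_visitors=500))
```

Notice how quickly the requirement grows as the expected lift shrinks: halving the difference you want to detect roughly quadruples the users you need.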

Once your test is live, monitor it closely in the first few hours to catch any technical issues. Keep a log of any external events or changes that might influence your results. This documentation will prove valuable during analysis.

4. Analysis and follow-up interviews

This is where the real insights emerge, through a combination of quantitative data and qualitative research. While your A/B test results will tell you which version performed better, user interviews help you understand the human reasons behind these numbers.
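On the quantitative side, one common way to check whether a difference between versions is larger than chance is a two-proportion z-test. The sketch below is a minimal version using only Python's standard library. The visitor and conversion counts are made up for illustration, and the function name is hypothetical; many teams simply rely on the statistics built into their testing tool.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, users_a, conv_b, users_b):
    """Compare conversion rates of control (A) and variant (B).

    Returns (absolute difference in rates, z statistic, two-sided p-value).
    """
    rate_a = conv_a / users_a
    rate_b = conv_b / users_b
    # Pooled rate under the assumption that both versions perform the same.
    pooled = (conv_a + conv_b) / (users_a + users_b)
    std_error = sqrt(pooled * (1 - pooled) * (1 / users_a + 1 / users_b))
    z = (rate_b - rate_a) / std_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return rate_b - rate_a, z, p_value


# Hypothetical results: 400 of 1,000 users converted on A, 460 of 1,000 on B.
diff, z, p = two_proportion_z_test(400, 1000, 460, 1000)
print(f"difference={diff:.3f}, z={z:.2f}, p={p:.4f}")
if p < 0.05:
    print("Difference is unlikely to be due to chance alone.")
else:
    print("Not enough evidence of a real difference yet.")
```

A small p-value tells you the difference is probably real, but not why it happened. That is the gap the interviews fill.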

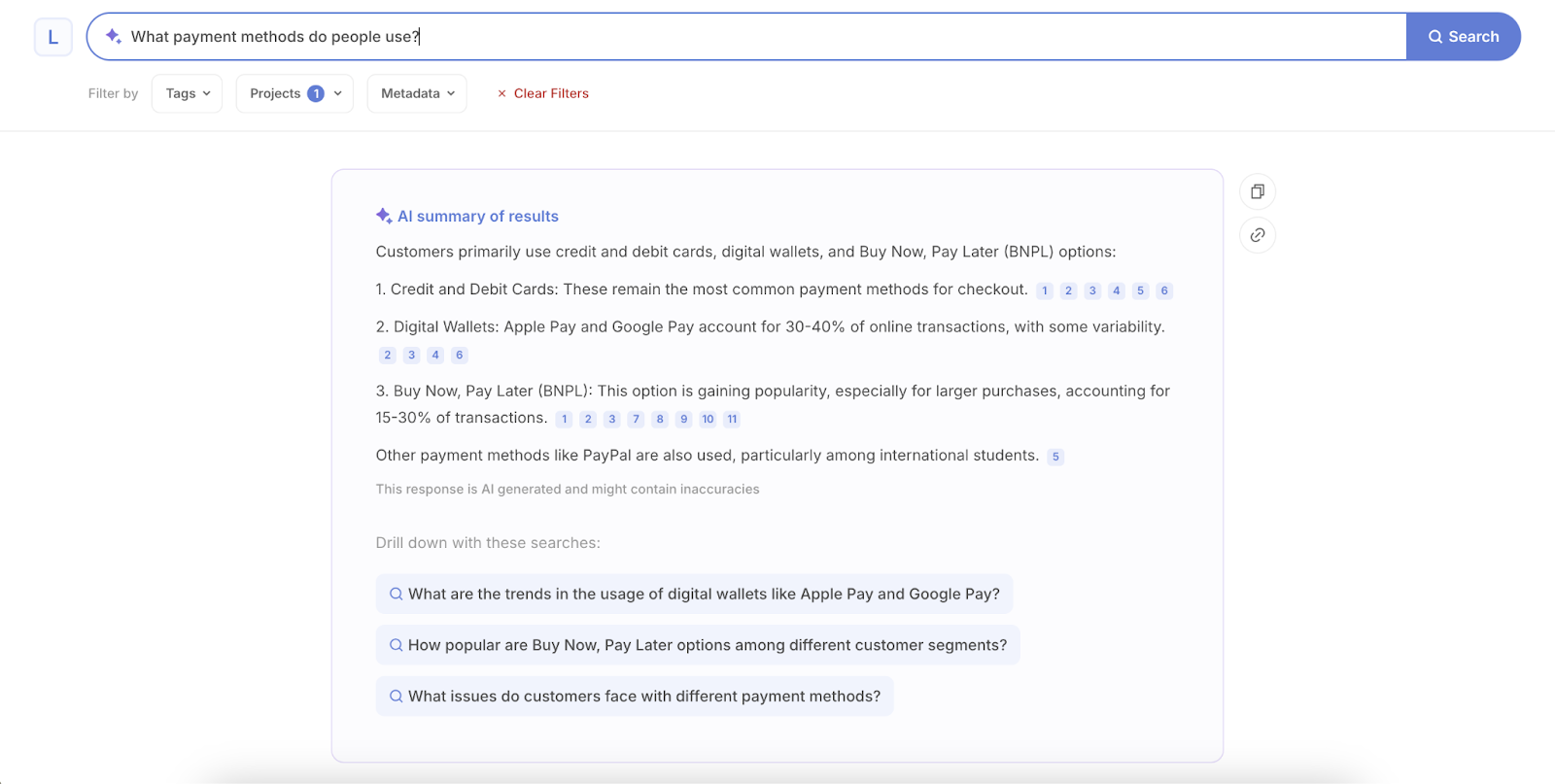

This is where research tools like Looppanel can be invaluable in your research process. After identifying your winning and losing versions, conduct user interviews to dig deeper into the user experience. Looppanel records and transcribes these conversations automatically, allowing you to focus on having meaningful discussions with users rather than taking notes.

For example, if your new checkout flow performed worse than expected, you can conduct in-depth interviews with users who experienced each version. Looppanel’s AI analysis will help you identify patterns in user feedback, highlighting common pain points or concerns that might not be visible in the quantitative data alone.

5. Documentation and sharing

Documenting your findings is also much easier with tools like Looppanel. Instead of writing long reports, you can share actual clips of user feedback that support your conclusions. The platform's automatic summaries help you communicate insights effectively to stakeholders, while its searchable repository ensures valuable insights don't get lost over time.

Create a narrative around your findings that connects the quantitative results with user insights. For instance, rather than just reporting that Version B had a 15% higher conversion rate, you can include clips of users explaining why they found it more intuitive or trustworthy.

6. Rollout and monitoring

When implementing your winning version, treat it as the beginning rather than the end. Continue monitoring metrics and gathering user feedback to ensure the change performs well over time. Conduct periodic check-ins with users, building a longitudinal understanding of how your changes impact the user experience.

The most successful teams use A/B testing as part of a broader research strategy, where each test generates not just results but new questions to explore. This cycle of testing, learning, and diving deeper through user interviews creates a continuous improvement process that leads to better user experiences over time.

Good vs. bad research questions for A/B testing

Good research questions focus on specific, measurable outcomes. Instead of asking "Which design is better?", ask "Which design leads to more users completing their profile setup within the first day?"

Bad questions often try to test too many things at once or lack clear success metrics. "Will redesigning the dashboard improve the user experience?" is too broad and vague to test effectively.

Here's a guide to building the right usability testing questions.

A/B testing UX examples

Let's look at a real-world example from HubSpot Academy that shows how A/B testing can drive meaningful improvements in user engagement and conversion rates.

The challenge

HubSpot Academy faced an interesting challenge with their homepage. Despite having over 55,000 page views, less than 1% of visitors were watching their homepage video. However, when users did watch the video, about half of them watched it all the way through. This suggested that while the content was engaging, something about the page design wasn't encouraging users to start watching.

Support chat logs revealed another issue: users weren't clear about what HubSpot Academy offered, even though it was a free educational resource. The UX team decided to test whether clearer value propositions could boost user engagement.

The test setup

The team created three different versions of the homepage:

- The original design served as Version A (control)

- Version B featured vibrant colors, dynamic text animations, and a more engaging visual hierarchy

- Version C used similar colorful elements but added animated images on the right side

The main metric they tracked was conversion rate, while secondary metrics included click-through rates and overall engagement levels.

The learnings

The results were enlightening. Version B outperformed the control by 6%, which might seem small but translated to roughly 375 additional sign-ups every month. Interestingly, Version C, despite having more animations, actually performed 1% worse than the control.

This test revealed several key insights about A/B testing in UX design:

- Small changes can have significant business impact

- More animation doesn't always mean better engagement

- The right balance of visual elements can significantly influence user behavior

- Testing helps avoid assumptions - without testing, the team might have assumed more animation (Version C) would perform better

What made this A/B testing example particularly valuable was how it combined quantitative metrics with qualitative user feedback. The team didn't just measure conversions; they also reviewed support chat transcripts to understand the underlying problems they needed to solve.

Best practices

Start with your highest-impact opportunities first. Look for areas where small changes could lead to significant improvements in key metrics. Run tests long enough to gather reliable data - rushing to conclusions can lead to poor decisions.

A/B vs. multivariate testing

While A/B testing compares two versions, multivariate testing examines multiple variables simultaneously. This can help teams find optimal combinations of design elements but requires much larger sample sizes to get reliable results.
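To see why the sample size grows so quickly, it helps to count the combinations. The sketch below uses three hypothetical page elements and a placeholder of 1,000 users per combination; none of these names or numbers come from a real test.

```python
from itertools import product


def list_combinations(elements):
    """Return every combination of options, one dict per test cell."""
    names = list(elements)
    return [dict(zip(names, combo)) for combo in product(*elements.values())]


# Hypothetical elements and options for a multivariate test.
elements = {
    "headline": ["current", "benefit-led"],
    "button_color": ["blue", "green", "orange"],
    "hero_image": ["static", "animated"],
}

cells = list_combinations(elements)
users_per_cell = 1000  # placeholder, not a recommendation

print(len(cells))                   # 12 combinations (2 x 3 x 2)
print(len(cells) * users_per_cell)  # 12000 users in total
```

A simple A/B test with the same per-version requirement would need only 2,000 users, which is why multivariate testing tends to suit high-traffic products.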

A/B split testing

The "split" in split testing refers to how user traffic is divided between versions. Most tests split traffic equally, but teams might adjust the split based on their goals. A risky change might be tested with just 10% of users initially, while a minor tweak could use a 50-50 split.
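One common way to implement a split is to hash each user ID into a bucket, so the same user always sees the same version. The sketch below is a minimal illustration, not how any particular testing tool works; the function name, the experiment name, and the user IDs are hypothetical.

```python
import hashlib


def assign_variant(user_id, experiment, variant_share=0.5):
    """Deterministically assign a user to "A" (control) or "B" (variant).

    variant_share is the fraction of users who should see version B,
    e.g. 0.1 for a cautious 10% rollout or 0.5 for an even split.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Map the first 32 bits of the hash to a number in [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "B" if bucket < variant_share else "A"


# Risky change: expose roughly 10% of users to version B.
users = [f"user-{i}" for i in range(10_000)]
in_b = sum(assign_variant(u, "new-checkout", 0.10) == "B" for u in users)
print(f"{in_b / len(users):.1%} of users see version B")
```

Including the experiment name in the hash means a user who lands in version B of one test is not automatically placed in version B of every other test.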

Remember, successful A/B testing in UX research requires patience, rigor, and a commitment to following the data, even when it challenges our assumptions about what makes good design.

Frequently Asked Questions (FAQs)

What is A/B testing in UX/UI?

A/B testing in UX design helps teams compare two versions of a design to see which one works better. Think of it as running a small experiment where half your users see one design while the other half sees a different version. Teams then measure which version better achieves their goals.

For teams wondering what A/B testing in UX/UI is, it's essentially a way to make design decisions based on real user behavior rather than gut feelings. A/B testing removes guesswork from the design process by providing concrete data about what actually works better for users.

What is A/B testing with an example?

A classic example of A/B testing in UX comes from Instagram's story feature. They tested whether showing a preview of the next story at the edge of the current story would make users watch more stories. Version A showed just the current story, while Version B showed a peek of the next one. The preview version led to more story views.

Another A/B testing example comes from Duolingo. They tested whether showing users their "streak" (consecutive days of practice) prominently would encourage more consistent app use. The version highlighting streaks led to higher daily user retention.

Does Netflix use A/B testing?

Yes, Netflix relies heavily on A/B testing to optimize its user experience. It tests everything from thumbnail images for shows to the order of content on your home screen. For Netflix, A/B testing is a way of constantly improving how users discover and enjoy content.

What is an example of a split test?

A notable example of a split test comes from Booking.com. They tested two different ways to show room availability: Version A displayed "2 rooms left" while Version B showed "Only 2 rooms left at this price." The urgency in Version B significantly increased bookings.

What is the difference between UX research and A/B testing?

While A/B testing focuses on measuring the effect of specific changes, UX research covers the broader work of understanding user behavior and needs. A/B testing is one tool in the UX research toolkit, but it can't replace deeper research methods like interviews or usability studies.

What is the difference between user testing and A/B testing?

User testing observes how people interact with your product, while A/B testing measures which version performs better according to specific metrics. Think of user testing as watching someone cook in your kitchen to see where they struggle, while A/B testing would be measuring whether a new kitchen layout helps people cook faster.

How to test a UX design?

A comprehensive approach to testing UX design combines several methods. Start with user research to understand needs, create prototypes to test concepts, and use A/B testing to validate specific design changes. The key is choosing the right testing method for your specific questions.

Teams should treat A/B testing as part of their larger testing strategy, not as their only testing method. Good testing often combines multiple approaches to get a complete picture of the user experience.

What are the 3 steps that UX designers do when testing products?

UX designers typically follow a three-step process: First, they research to understand user needs and create hypotheses. Second, they design and implement tests to validate these hypotheses. Third, they analyze results and iterate based on findings. This process helps ensure that testing leads to meaningful improvements.

What is testing in UX design?

Testing in UX design means systematically evaluating how well your product meets user needs. It includes various methods like usability testing, A/B testing, surveys, and analytics review. Good testing helps teams make informed decisions about design changes.

When considering what testing in UX design means, think of it as a way to remove assumptions from the design process. Every test should answer specific questions about how users interact with your product.

What is beta testing in UX design?

Beta testing involves releasing a nearly finished product to a limited audience before the full launch. Unlike A/B testing, which compares specific changes, beta testing looks at the overall product experience. It helps catch issues that might not appear in more controlled testing environments.
